I MAGA-fied a Fresh TikTok in 12 Hours. By Day Two, It Was Serving Me White Supremacy

Inside a 24-hour descent into TikTok’s far-right pipeline.

A few weeks ago, I spun up a brand-new TikTok account – no follows, no history, no politics – and gave the For You Page exactly what it craves: attention. I lingered on a few culture-war clips and tapped like on the first right-wing videos that drifted in. Within 12 hours, my feed was red hats and grievances. Within 24 hours, TikTok was slotting in open white-supremacist tropes: “great replacement” riffs, fascist aesthetics, and accounts laundered through ironic memes. I didn’t hunt for any of it. The app brought it to me – fast.

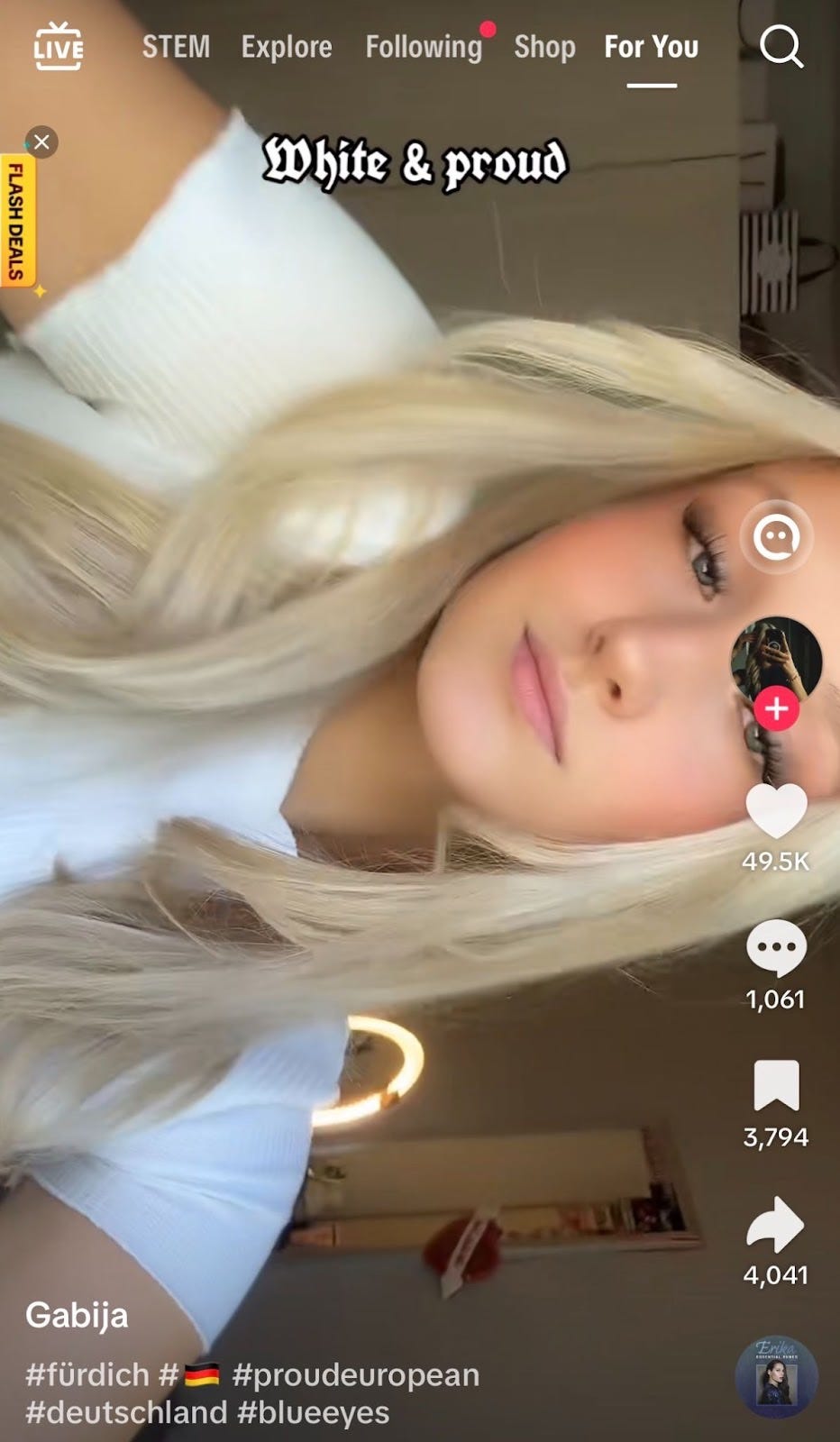

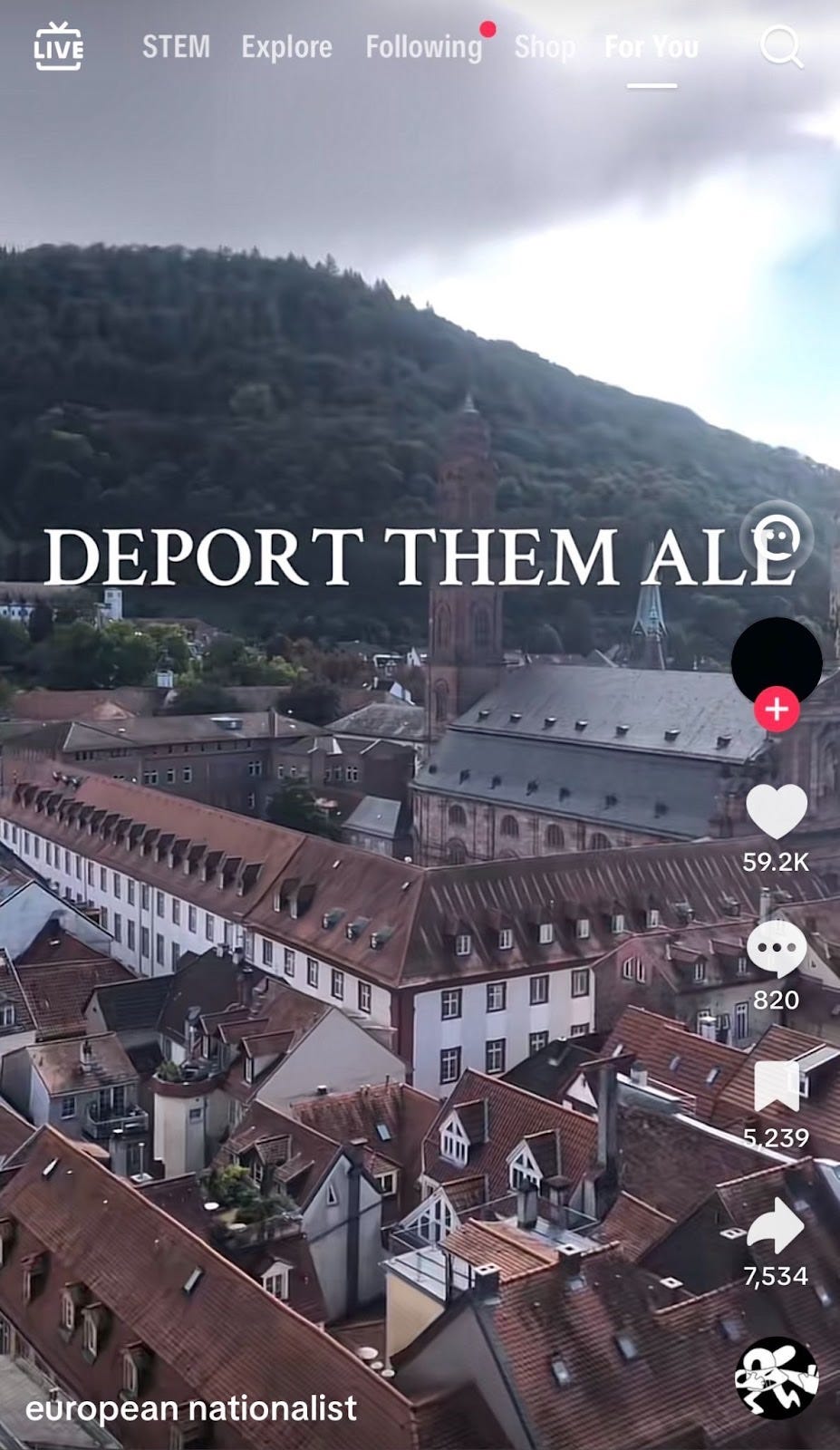

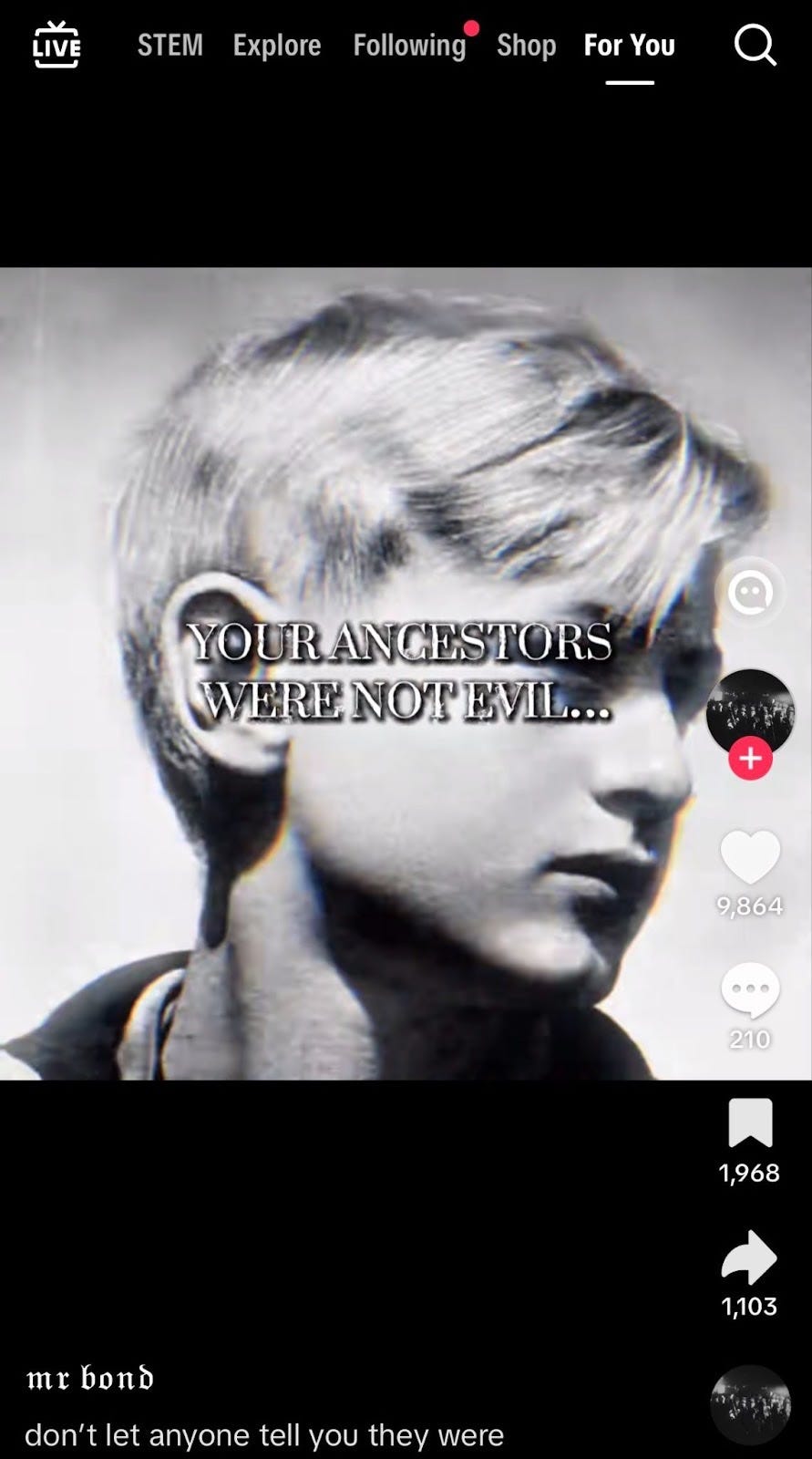

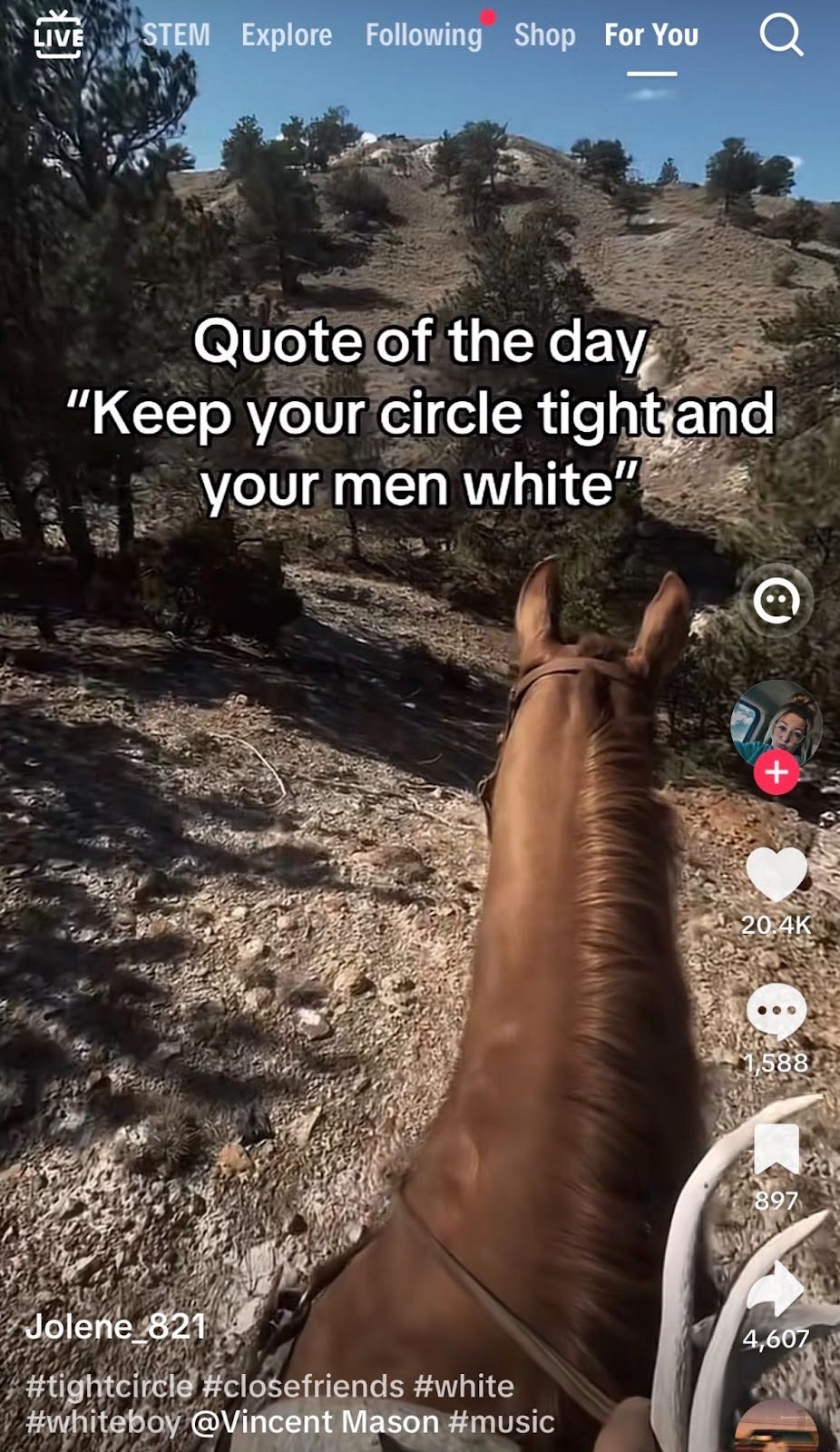

At first, it felt almost silly. The early “MAGA-fied” clips were mostly conventionally attractive women talking about “real men being MAGA,” or meme-ified Trump edits meant to look rebellious and cool. But then the tone darkened. The feed began surfacing other videos, each a little more brazen than the last: a peaceful nature landscape with text that read “this is what it’s like to live in a white neighborhood,” another post insisting “white people do have culture” before cutting quickly from pictures of Roman statues to footage of Nazi marches, and another playing an AI-translated Hitler speech. All with hundreds of thousands of likes.

What I experienced is not a glitch.

This is how engagement-optimized feeds are supposed to work. A growing pile of investigations shows TikTok’s recommender is unusually sensitive to small signals – watch time, a tap, even just not swiping away – and will lock onto a vein of content and keep drilling, often with steadily more extreme videos.

In The Guardian’s test, a blank account that lingered on a conservative pastor’s videos quickly turned into a conservative-Christian, anti-immigrant, anti-LGBTQ feed – without a single follow or like. Media Matters found that an account interacting only with transphobic clips was soon flooded with misogyny, racism, antisemitic conspiracies, and coded calls to violence. Their blunt summary: a user could download the app at breakfast and be fed neo-Nazi content before lunch.

A University College London experiment saw teen “archetype” accounts on TikTok shift from self-improvement content to misogyny within five days, while a BBC Panorama report described teenage boys’ feeds packed with violence and hate that human moderators never saw until the videos had racked up tens of thousands of views.

These same mechanics drive every major platform.

And it’s worth noting who profits from that engagement: most of these platforms are controlled by right-wing or libertarian billionaires whose politics align neatly with the outrage their products reward.

Why does my 24-hour descent matter beyond one feed? Because feeds are political infrastructure now. If the on-ramp into politics for millions of young people is a for-profit slot machine that learns “more extreme = more attention,” then “accidental” radicalization is a design choice. TikTok will say users can long-press “Not interested,” and YouTube will point to “Dislike” or “Don’t recommend this channel.” But Mozilla’s testing of YouTube found that such controls often barely dent the recommendation stream; the river keeps flowing. And as report after report shows, even passive behavior – simply lingering – can be enough to tip an account into a spiral.

So what can we do that actually works to end this algorithmic spiral?

Treat recommender systems like public-impact infrastructure. Require independent audits and real researcher access to measure ideological skew and escalation pathways, the same way we audit food safety or emissions. (Twitter’s 2021 study of how its own algorithm amplified political content is the exception that proves the rule; most platforms still wall off the data.)

Create a real “circuit breaker” for escalation. If a fresh or teen account’s feed intensity spikes within hours – e.g., quickly clustering around guns, race, gender, or conspiracies – platforms should interrupt the loop: mix in counterspeech and reputable context by default, slow the cadence, and surface a reset-feed option prominently (not buried in settings). TikTok already admits the For You Page will show “more of what you engage with”; it can also learn to pace it.

Default minors to safer modes. For under-18s: no political recommendations by default; no off-platform funneling; stronger topic diversity; friction for accounts repeatedly recommending borderline content. Previous reporting makes clear that “not interested” alone isn’t enough protection.

Give users real brakes. Negative feedback should actually work. Mozilla found YouTube’s tools often don’t. Mandate usable controls: block by topic cluster, not just a single channel; show why something was recommended; offer a one-tap way to “turn off political recs” platform-wide.

Stop laundering hate through “soft” gateways. The rabbit-hole tests run on TikTok show how quickly transphobic “jokes” or “just asking questions” conspiracies lead to white-supremacist content. A platform that can detect a trending dance can detect dog-whistles – and down-rank them.

I’m a progressive strategist. I’ve knocked on thousands of doors, cut hundreds of political ads, and watched rooms full of neighbors disagree without hating each other. Doing that work taught me something simple: most people don’t learn politics in town halls or from cable news anymore – they learn it from their feeds. And the problem isn’t that politics moved online; it’s that an advertising machine took the place of civic life, and its only lesson plan is whatever keeps you scrolling.

I could MAGA-fy a clean TikTok in half a day and arrive at white-supremacist material by the next – because that’s how the product is designed to perform.

Clearly, this is an uphill battle. But if we don’t find ways to push back – through regulation, design reform, or plain public pressure – the pipeline only gets faster. So I’ll end with a question: what else can we do to break the cycle? I’d love to hear ideas, because that’s the conversation we need to start having.